mindspore.ops.silu

- mindspore.ops.silu(input)

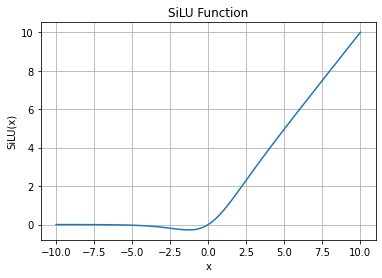

Computes Sigmoid Linear Unit of input element-wise, also known as Swish function. The SiLU function is defined as:

\[\text{SiLU}(x) = x * \sigma(x),\]where \(x\) is an element of the input, \(\sigma(x)\) is Sigmoid function.

\[\text{sigma}(x_i) = \frac{1}{1 + \exp(-x_i)},\]SiLU Function Graph:

- Parameters

input (Tensor) – The input tensor, \(x\) in the preceding formula. Its data type must be float16 or float32.

- Returns

Tensor, with the same type and shape as the input.

- Raises

TypeError – If dtype of input is neither float16 nor float32.

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> from mindspore import Tensor, ops
    >>> import numpy as np
    >>> input = Tensor(np.array([-1, 2, -3, 2, -1]), mindspore.float16)
    >>> output = ops.silu(input)
    >>> print(output)
    [-0.269 1.762 -0.1423 1.762 -0.269]
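
The SiLU definition can be checked by hand against the example values. The following is a minimal pure-Python sketch, not MindSpore's implementation. The helper names `sigmoid` and `silu_reference` are hypothetical and exist only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def silu_reference(values):
    """Apply SiLU(x) = x * sigmoid(x) to each element of a list of floats.

    Hypothetical reference helper for illustration, not part of MindSpore.
    """
    return [x * sigmoid(x) for x in values]


# Same input values as the MindSpore example.
inputs = [-1.0, 2.0, -3.0, 2.0, -1.0]
outputs = silu_reference(inputs)
print([round(y, 4) for y in outputs])
# prints [-0.2689, 1.7616, -0.1423, 1.7616, -0.2689]
```

These values agree with the MindSpore example output to about three decimal places. The small differences (for example 1.7616 versus 1.762) are consistent with float16 rounding, since this sketch computes in Python's double precision.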