mindspore.ops.celu

- mindspore.ops.celu(x, alpha=1.0)

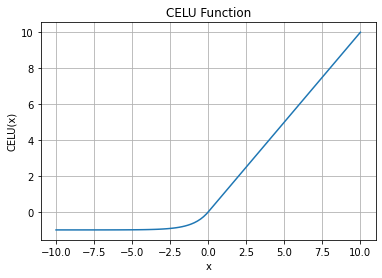

CeLU activation function. Computes the CeLU (Continuously Differentiable Exponential Linear Unit) of the input tensor element-wise. The formula is defined as follows:

\[\text{CeLU}(x) = \max(0,x) + \min(0, \alpha * (\exp(x/\alpha) - 1))\]

For more details, please refer to celu.
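Writing the formula piecewise shows where the name comes from. Assuming alpha > 0 (the docstring itself only rules out alpha = 0), for x ≥ 0 we have exp(x/alpha) ≥ 1, so the min term vanishes; for x < 0 the max term vanishes and the min term is negative:

\[\text{CeLU}(x) = \begin{cases} x, & x \ge 0 \\ \alpha\left(\exp(x/\alpha) - 1\right), & x < 0 \end{cases}\]

\[\frac{d}{dx}\text{CeLU}(x) = \begin{cases} 1, & x > 0 \\ \exp(x/\alpha), & x < 0 \end{cases}, \qquad \lim_{x \to 0^-} \exp(x/\alpha) = 1\]

Both one-sided derivatives at 0 equal 1, so the derivative is continuous for every alpha > 0. With alpha = 1 the function coincides with ELU using its own alpha = 1.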

Warning

This is an experimental API that is subject to change or deletion.

[Figure: CELU activation function graph]

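- Parameters

x (Tensor) – The input of celu, with data type float16 or float32.

alpha (float, optional) – The alpha value in the CeLU formula. Must not be 0. Default: 1.0.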

- Returns

Tensor, has the same data type and shape as the input.

- Raises

TypeError – If alpha is not a float.

TypeError – If x is not a Tensor.

TypeError – If dtype of x is neither float16 nor float32.

ValueError – If alpha is 0.
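The Raises conditions above can be summarized as a plain-Python argument check. This is a hypothetical sketch: `check_celu_args` is not a MindSpore function, and a NumPy array stands in for a MindSpore Tensor so the code runs without MindSpore installed. The order in which MindSpore actually performs these checks is not stated here; the sketch simply follows the order of the list.

```python
import numpy as np


def check_celu_args(x, alpha):
    """Hypothetical helper mirroring the documented Raises conditions.

    np.ndarray is used as a stand-in for mindspore.Tensor; this is not MindSpore code.
    """
    # TypeError: alpha must be a Python float (an int such as 1 is rejected).
    if not isinstance(alpha, float):
        raise TypeError(f"alpha must be a float, got {type(alpha).__name__}")
    # TypeError: x must be a tensor (here: an ndarray stand-in).
    if not isinstance(x, np.ndarray):
        raise TypeError(f"x must be a Tensor, got {type(x).__name__}")
    # TypeError: only float16 and float32 inputs are accepted.
    if x.dtype not in (np.float16, np.float32):
        raise TypeError(f"x dtype must be float16 or float32, got {x.dtype}")
    # ValueError: the formula divides by alpha in exp(x / alpha).
    if alpha == 0.0:
        raise ValueError("alpha must not be 0")
```

Rejecting alpha = 0 follows directly from the formula: the term exp(x/alpha) is undefined when alpha is 0.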

- Supported Platforms:

Ascend GPU CPU

Examples

>>> import mindspore
>>> import numpy as np
>>> from mindspore import Tensor, ops
>>> x = Tensor(np.array([-2.0, -1.0, 1.0, 2.0]), mindspore.float32)
>>> output = ops.celu(x, alpha=1.0)
>>> print(output)
[-0.86466473 -0.63212055  1.          2.        ]
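To cross-check these values without MindSpore, the formula can be evaluated directly in NumPy. This is a minimal sketch assuming only NumPy; `celu_reference` is a hypothetical helper, not part of MindSpore, and it uses `np.expm1` (which computes exp(x) - 1 accurately near 0) for the exponential term. It is element-wise and keeps the input's shape and float dtype, matching the Returns description.

```python
import numpy as np


def celu_reference(x, alpha=1.0):
    """NumPy sketch of max(0, x) + min(0, alpha * (exp(x / alpha) - 1)).

    Hypothetical helper for checking values; not MindSpore's implementation.
    """
    x = np.asarray(x)
    # Keep alpha in x's dtype so float16/float32 inputs are not upcast.
    a = np.asarray(alpha, dtype=x.dtype)
    return np.maximum(0, x) + np.minimum(0, a * np.expm1(x / a))


x = np.array([-2.0, -1.0, 1.0, 2.0], dtype=np.float32)
print(celu_reference(x, alpha=1.0))
```

Changing alpha only affects negative inputs: positive values pass through unchanged, while the negative branch saturates toward -alpha.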