2D Taylor-Green Vortex Flow

This case requires MindSpore >= 2.0.0 in order to call the following interfaces: mindspore.jit, mindspore.jit_class, mindspore.jacrev.

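Overview

In fluid mechanics, the Taylor-Green vortex is an unsteady, decaying vortex flow that has an exact solution in two dimensions under periodic boundary conditions. Physics-informed Neural Networks (PINNs) use a loss function that approximates the governing equations, together with a simple network architecture, and offer a new approach to solving complex fluid problems quickly. This case uses the PINNs method to simulate the two-dimensional Taylor-Green vortex flow.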
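For reference, the governing equations that the loss function approximates are the two-dimensional incompressible Navier-Stokes equations. Written to match the momentum_x, momentum_y and continuity residuals printed in the training log, where the viscous coefficient 1.0 is taken here to correspond to \(Re = 1\), they read:

\[
\begin{aligned}
\frac{\partial u}{\partial x} + \frac{\partial v}{\partial y} &= 0, \\
\frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} + v\frac{\partial u}{\partial y} + \frac{\partial p}{\partial x} - \frac{1}{Re}\left(\frac{\partial^2 u}{\partial x^2} + \frac{\partial^2 u}{\partial y^2}\right) &= 0, \\
\frac{\partial v}{\partial t} + u\frac{\partial v}{\partial x} + v\frac{\partial v}{\partial y} + \frac{\partial p}{\partial y} - \frac{1}{Re}\left(\frac{\partial^2 v}{\partial x^2} + \frac{\partial^2 v}{\partial y^2}\right) &= 0.
\end{aligned}
\]

Here \(u\) and \(v\) are the velocity components, \(p\) is the pressure, and \(Re\) is the Reynolds number.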

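Technical Path

MindSpore Flow solves this problem in the following steps:

Create the dataset.

Build the model.

Define the optimizer.

Define the NavierStokes2D problem.

Train the model.

Run model inference and visualization.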

Import the Required Packages

[1]:

import time

import numpy as np

import sympy

import mindspore

from mindspore import nn, ops, jit, set_seed

from mindspore import numpy as mnp

The src package used below can be downloaded from applications/physics_driven/navier_stokes/taylor_green/src.

[1]:

from mindflow.cell import MultiScaleFCCell

from mindflow.utils import load_yaml_config

from mindflow.pde import NavierStokes, sympy_to_mindspore

from src import create_training_dataset, create_test_dataset, calculate_l2_error, NavierStokes2D

set_seed(123456)

np.random.seed(123456)

The taylor_green_2D.yaml configuration file used below can be downloaded from applications/physics_driven/navier_stokes/taylor_green/configs/taylor_green_2D.yaml.

[2]:

mindspore.set_context(mode=mindspore.GRAPH_MODE, device_target="GPU", device_id=0, save_graphs=False)

use_ascend = mindspore.get_context(attr_key='device_target') == "Ascend"

config = load_yaml_config('taylor_green_2D.yaml')

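Create the Dataset

The training dataset is created by the create_training_dataset function. It consists of interior domain points, initial condition points, and boundary condition points, namely ['time_rect_domain_points', 'time_rect_IC_points', 'time_rect_BC_points'], all sampled with the corresponding mindflow.geometry interfaces. See the create_training_dataset function in /src/dataset.py for details.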
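To make the three point sets concrete, the following is a minimal NumPy sketch of how such points could be drawn. It does not use mindflow.geometry and is not the code in /src/dataset.py; the bounds (x, y in [0, 2π], t in [0, 2]), the sample counts, and the function names are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed space-time box: (x, y) in [0, 2*pi]^2, t in [0, 2].
LOW = np.array([0.0, 0.0, 0.0])
HIGH = np.array([2.0 * np.pi, 2.0 * np.pi, 2.0])


def sample_domain(n):
    """Uniform random points (x, y, t) inside the space-time box."""
    return rng.uniform(LOW, HIGH, size=(n, 3))


def sample_initial(n):
    """Random (x, y) with t fixed to the lower time bound."""
    pts = sample_domain(n)
    pts[:, 2] = LOW[2]
    return pts


def sample_boundary(n):
    """Points on the four spatial edges: one spatial coordinate is pinned to a bound."""
    pts = sample_domain(n)
    axis = rng.integers(0, 2, size=n)  # 0 -> pin x, 1 -> pin y
    side = rng.integers(0, 2, size=n)  # 0 -> lower bound, 1 -> upper bound
    pts[np.arange(n), axis] = np.where(side == 0, LOW[axis], HIGH[axis])
    return pts


domain_pts = sample_domain(1024)
ic_pts = sample_initial(256)
bc_pts = sample_boundary(256)
print(domain_pts.shape, ic_pts.shape, bc_pts.shape)
```

Each set feeds a different loss term: the domain points constrain the PDE residual, while the initial and boundary points constrain the solution values.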

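The test dataset is created by the create_test_dataset function. This case builds the validation set from the exact solution given in J. Kim and P. Moin, Application of a fractional-step method to incompressible Navier-Stokes equations, Journal of Computational Physics, Volume 59, Issue 2, 1985.

This case simulates Taylor-Green vortex flow in a square domain of size \(2\pi \times 2\pi\) over the time interval \(t \in (0,2)\). The exact solution of the problem, consistent with the bc_u, bc_v and bc_p expressions printed in the training log, is:

\[
\begin{aligned}
u(x,y,t) &= -\cos(x)\sin(y)e^{-2t}, \\
v(x,y,t) &= \sin(x)\cos(y)e^{-2t}, \\
p(x,y,t) &= -0.25\left(\cos(2x) + \cos(2y)\right)e^{-4t}.
\end{aligned}
\]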
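As a sanity check, the exact fields can be evaluated with NumPy. The sketch below takes u, v and p from the boundary-condition residuals printed in the training log (bc_u, bc_v, bc_p). The function name `taylor_green_exact` and the grid resolution are illustrative and are not part of the src package.

```python
import numpy as np


def taylor_green_exact(x, y, t):
    """Exact 2D Taylor-Green fields, read off the bc_u / bc_v / bc_p residuals."""
    u = -np.cos(x) * np.sin(y) * np.exp(-2.0 * t)
    v = np.sin(x) * np.cos(y) * np.exp(-2.0 * t)
    p = -0.25 * (np.cos(2.0 * x) + np.cos(2.0 * y)) * np.exp(-4.0 * t)
    return u, v, p


# Evaluate on a small grid over the 2*pi x 2*pi square at t = 1.
xs = np.linspace(0.0, 2.0 * np.pi, 65)
X, Y = np.meshgrid(xs, xs, indexing="ij")
u, v, p = taylor_green_exact(X, Y, 1.0)

# Continuity check: du/dx + dv/dy should vanish at interior grid points.
h = xs[1] - xs[0]
du_dx = np.gradient(u, h, axis=0)
dv_dy = np.gradient(v, h, axis=1)
max_divergence = np.max(np.abs(du_dx + dv_dy)[1:-1, 1:-1])
print(f"max |u|: {np.max(np.abs(u)):.4f}, max |div|: {max_divergence:.2e}")
```

The velocity amplitude at t = 1 equals exp(-2), which shows how quickly the vortex decays over the simulated interval.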

[2]:

# create training dataset

taylor_dataset = create_training_dataset(config)

train_dataset = taylor_dataset.create_dataset(batch_size=config["train_batch_size"],
                                              shuffle=True,
                                              prebatched_data=True,
                                              drop_remainder=True)

# create test dataset

inputs, label = create_test_dataset(config)

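Build the Model

This example uses a simple fully connected network with a depth of 6 layers and 128 neurons per layer. The activation function is tanh.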
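For intuition, a network of this shape can be sketched in plain NumPy. This is not the MultiScaleFCCell implementation. It assumes three inputs (x, y, t), three outputs (u, v, p), and that "6 layers" means six weight layers (five hidden layers plus a linear output layer); the initialization is also an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(0)


def init_mlp(in_dim, out_dim, hidden_layers, neurons):
    """Create (weight, bias) pairs for a plain fully connected network."""
    sizes = [in_dim] + [neurons] * hidden_layers + [out_dim]
    params = []
    for fan_in, fan_out in zip(sizes[:-1], sizes[1:]):
        w = rng.normal(0.0, np.sqrt(1.0 / fan_in), size=(fan_in, fan_out))
        params.append((w, np.zeros(fan_out)))
    return params


def mlp_forward(params, x):
    """Apply tanh after every layer except the last, which stays linear."""
    for w, b in params[:-1]:
        x = np.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b


# Assumed sizes: inputs (x, y, t), outputs (u, v, p), 128 neurons per hidden layer.
params = init_mlp(in_dim=3, out_dim=3, hidden_layers=5, neurons=128)
batch = rng.uniform(-1.0, 1.0, size=(8, 3))
out = mlp_forward(params, batch)
print(out.shape)  # (8, 3)
```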

[3]:

coord_min = np.array(config["geometry"]["coord_min"] + [config["geometry"]["time_min"]]).astype(np.float32)

coord_max = np.array(config["geometry"]["coord_max"] + [config["geometry"]["time_max"]]).astype(np.float32)

input_center = list(0.5 * (coord_max + coord_min))

input_scale = list(2.0 / (coord_max - coord_min))

model = MultiScaleFCCell(in_channels=config["model"]["in_channels"],
                         out_channels=config["model"]["out_channels"],
                         layers=config["model"]["layers"],
                         neurons=config["model"]["neurons"],
                         residual=config["model"]["residual"],
                         act='tanh',
                         num_scales=1,
                         input_scale=input_scale,
                         input_center=input_center)
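The input_center and input_scale values computed above normalize the coordinates before they enter the network. The sketch below shows the effect, assuming the network applies (x - input_center) * input_scale to its inputs and assuming the bounds x, y in [0, 2π] and t in [0, 2]; the actual bounds come from taylor_green_2D.yaml, which is not reproduced here.

```python
import numpy as np

# Assumed bounds: x, y in [0, 2*pi], t in [0, 2].
coord_min = np.array([0.0, 0.0, 0.0], dtype=np.float32)
coord_max = np.array([2.0 * np.pi, 2.0 * np.pi, 2.0], dtype=np.float32)

input_center = 0.5 * (coord_max + coord_min)
input_scale = 2.0 / (coord_max - coord_min)


def normalize(points):
    """Shift by the box center, then scale so each coordinate spans [-1, 1]."""
    return (points - input_center) * input_scale


corners = np.stack([coord_min, coord_max])
normalized_corners = normalize(corners)
print(normalized_corners)
```

Under these assumptions the lower corner maps to -1 and the upper corner to +1 in every coordinate, which keeps the tanh activations away from saturation at the start of training.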

Optimizer

[5]:

params = model.trainable_params()

optimizer = nn.Adam(params, learning_rate=config["optimizer"]["initial_lr"])
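As a rough illustration of what an Adam optimizer does with each gradient, here is a NumPy sketch of the standard Adam update rule with bias correction. It is a textbook version, not MindSpore's implementation, and the hyperparameter defaults and the toy objective are assumptions for the example.

```python
import numpy as np


def adam_step(param, grad, m, v, step, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update with bias correction; returns the new (param, m, v)."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    m_hat = m / (1.0 - beta1 ** step)
    v_hat = v / (1.0 - beta2 ** step)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v


# Minimize f(w) = sum(w**2), whose gradient is 2 * w.
w = np.array([1.0, -2.0])
m = np.zeros_like(w)
v = np.zeros_like(w)
for step in range(1, 2001):
    w, m, v = adam_step(w, 2.0 * w, m, v, step, lr=1e-2)
final_loss = float(np.sum(w ** 2))
print(f"final loss: {final_loss:.3e}")
```

On the first step the update has magnitude close to lr regardless of the gradient scale, which is one reason Adam is a common default for PINN training where loss terms differ widely in size.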

Model Training

With MindSpore >= 2.0.0, the neural network can be trained using the functional programming paradigm.

[7]:

def train():
    problem = NavierStokes2D(model, re=config["Re"])

    # Mixed precision with loss scaling is only enabled on Ascend.
    if use_ascend:
        from mindspore.amp import DynamicLossScaler, auto_mixed_precision, all_finite
        loss_scaler = DynamicLossScaler(1024, 2, 100)
        auto_mixed_precision(model, 'O3')

    def forward_fn(pde_data, ic_data, bc_data):
        loss = problem.get_loss(pde_data, ic_data, bc_data)
        if use_ascend:
            loss = loss_scaler.scale(loss)
        return loss

    # Returns the loss together with its gradients w.r.t. the optimizer parameters.
    grad_fn = mindspore.value_and_grad(forward_fn, None, optimizer.parameters, has_aux=False)

    @jit
    def train_step(pde_data, ic_data, bc_data):
        loss, grads = grad_fn(pde_data, ic_data, bc_data)
        if use_ascend:
            loss = loss_scaler.unscale(loss)
            # Skip the parameter update if any gradient overflowed.
            if all_finite(grads):
                grads = loss_scaler.unscale(grads)
                loss = ops.depend(loss, optimizer(grads))
        else:
            loss = ops.depend(loss, optimizer(grads))
        return loss

    epochs = config["train_epochs"]
    steps_per_epochs = train_dataset.get_dataset_size()
    sink_process = mindspore.data_sink(train_step, train_dataset, sink_size=1)

    for epoch in range(1, 1 + epochs):
        # train
        time_beg = time.time()
        model.set_train(True)
        for _ in range(steps_per_epochs):
            step_train_loss = sink_process()

        # evaluate
        model.set_train(False)
        if epoch % config["eval_interval_epochs"] == 0:
            print(f"epoch: {epoch} train loss: {step_train_loss} epoch time: {(time.time() - time_beg) * 1000 :.3f} ms")
            calculate_l2_error(model, inputs, label, config)
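The Ascend branch of the training function scales the loss before differentiation and unscales the gradients afterwards, so that small gradients survive reduced precision. The idea can be sketched in plain Python. `ToyLossScaler` is a hypothetical class written for this illustration; it takes the same three arguments as the call above (initial scale 1024, factor 2, window 100) but is not MindSpore's DynamicLossScaler, and its `adjust` method shows the conventional dynamic update rule for completeness.

```python
import math


class ToyLossScaler:
    """Hypothetical stand-in illustrating dynamic loss scaling (not MindSpore's class)."""

    def __init__(self, scale_value, scale_factor, scale_window):
        self.scale_value = float(scale_value)
        self.scale_factor = scale_factor
        self.scale_window = scale_window
        self.good_steps = 0

    def scale(self, value):
        return value * self.scale_value

    def unscale(self, values):
        return [g / self.scale_value for g in values]

    def adjust(self, grads_finite):
        """Shrink the scale on overflow; grow it after scale_window finite steps in a row."""
        if not grads_finite:
            self.scale_value = max(1.0, self.scale_value / self.scale_factor)
            self.good_steps = 0
            return
        self.good_steps += 1
        if self.good_steps >= self.scale_window:
            self.scale_value *= self.scale_factor
            self.good_steps = 0


def all_finite(values):
    """True if no value is inf or NaN."""
    return all(math.isfinite(g) for g in values)


scaler = ToyLossScaler(1024, 2, 100)
scaled_grads = [scaler.scale(g) for g in (1e-6, -3e-5)]  # stand-in for gradients of the scaled loss
finite = all_finite(scaled_grads)
grads = scaler.unscale(scaled_grads) if finite else None
scaler.adjust(finite)

scaler.adjust(False)  # simulate one overflow: the scale is halved
print(grads, scaler.scale_value)
```

Skipping the optimizer step when the gradients are not finite, as train_step does, prevents a single overflow from corrupting the weights.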

[3]:

start_time = time.time()

train()

print("End-to-End total time: {} s".format(time.time() - start_time))

momentum_x: u(x, y, t)*Derivative(u(x, y, t), x) + v(x, y, t)*Derivative(u(x, y, t), y) + Derivative(p(x, y, t), x) + Derivative(u(x, y, t), t) - 1.0*Derivative(u(x, y, t), (x, 2)) - 1.0*Derivative(u(x, y, t), (y, 2))

Item numbers of current derivative formula nodes: 6

momentum_y: u(x, y, t)*Derivative(v(x, y, t), x) + v(x, y, t)*Derivative(v(x, y, t), y) + Derivative(p(x, y, t), y) + Derivative(v(x, y, t), t) - 1.0*Derivative(v(x, y, t), (x, 2)) - 1.0*Derivative(v(x, y, t), (y, 2))

Item numbers of current derivative formula nodes: 6

continuty: Derivative(u(x, y, t), x) + Derivative(v(x, y, t), y)

Item numbers of current derivative formula nodes: 2

ic_u: u(x, y, t) + sin(y)*cos(x)

Item numbers of current derivative formula nodes: 2

ic_v: v(x, y, t) - sin(x)*cos(y)

Item numbers of current derivative formula nodes: 2

ic_p: p(x, y, t) + 0.25*cos(2*x) + 0.25*cos(2*y)

Item numbers of current derivative formula nodes: 3

bc_u: u(x, y, t) + exp(-2*t)*sin(y)*cos(x)

Item numbers of current derivative formula nodes: 2

bc_v: v(x, y, t) - exp(-2*t)*sin(x)*cos(y)

Item numbers of current derivative formula nodes: 2

bc_p: p(x, y, t) + 0.25*exp(-4*t)*cos(2*x) + 0.25*exp(-4*t)*cos(2*y)

Item numbers of current derivative formula nodes: 3

epoch: 20 train loss: 0.11818831 epoch time: 9838.472 ms

predict total time: 342.714786529541 ms

l2_error, U: 0.7095809547153462 , V: 0.7081305150496081 , P: 1.004580707024092 , Total: 0.7376210740866216

==================================================================================================

epoch: 40 train loss: 0.025397364 epoch time: 9853.950 ms

predict total time: 67.26336479187012 ms

l2_error, U: 0.09177234501446464 , V: 0.14504987645942635 , P: 1.0217915750380309 , Total: 0.3150453016208772

==================================================================================================

epoch: 60 train loss: 0.0049396083 epoch time: 10158.307 ms

predict total time: 121.54984474182129 ms

l2_error, U: 0.08648064925211238 , V: 0.07875554509736878 , P: 0.711385847511365 , Total: 0.2187113170206073

==================================================================================================

epoch: 80 train loss: 0.0018874758 epoch time: 10349.795 ms

predict total time: 85.42561531066895 ms

l2_error, U: 0.08687053366212526 , V: 0.10624717784645109 , P: 0.3269822261697911 , Total: 0.1319986181134018

==================================================================================================

......

epoch: 460 train loss: 0.00015093417 epoch time: 9928.474 ms

predict total time: 81.79974555969238 ms

l2_error, U: 0.033782269766829076 , V: 0.025816595720090357 , P: 0.08782072926563861 , Total: 0.03824859644715835

==================================================================================================

epoch: 480 train loss: 6.400551e-05 epoch time: 9956.549 ms

predict total time: 104.77519035339355 ms

l2_error, U: 0.02242134127961232 , V: 0.021098481157660533 , P: 0.06210985820202502 , Total: 0.027418651376509482

==================================================================================================

epoch: 500 train loss: 8.7400025e-05 epoch time: 10215.720 ms

predict total time: 77.20041275024414 ms

l2_error, U: 0.021138056243295636 , V: 0.013343674071961624 , P: 0.045241559122240635 , Total: 0.02132725837819097

==================================================================================================

End-to-End total time: 5011.718255519867 s
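The l2_error lines in the log report one error per field (U, V, P) plus a total. A minimal sketch of such a metric is shown below, assuming calculate_l2_error computes a relative L2 norm; that helper lives in the src package and is not reproduced here, so the function name and the toy data are illustrative only.

```python
import numpy as np


def relative_l2_error(prediction, label):
    """||prediction - label||_2 / ||label||_2 over all entries."""
    return np.linalg.norm(prediction - label) / np.linalg.norm(label)


# Toy data: columns are (u, v, p); the prediction is the label scaled by 1.01.
rng = np.random.default_rng(0)
label = rng.normal(size=(1000, 3))
prediction = label * 1.01

err_u = relative_l2_error(prediction[:, 0], label[:, 0])
err_v = relative_l2_error(prediction[:, 1], label[:, 1])
err_p = relative_l2_error(prediction[:, 2], label[:, 2])
err_total = relative_l2_error(prediction, label)
print(f"U: {err_u:.4f}, V: {err_v:.4f}, P: {err_p:.4f}, Total: {err_total:.4f}")
```

Because the error is normalized by the norm of the label, a value of 0.02 means the prediction deviates from the exact solution by about 2% in the L2 sense.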

Model Inference and Visualization

[4]:

from src import visual

# visualization

visual(model=model, epoch=config["train_epochs"], input_data=inputs, label=label)

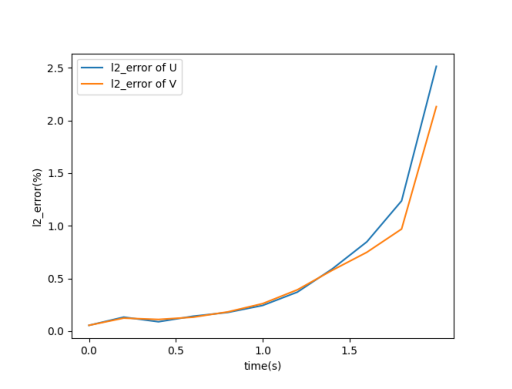

Because the velocity decays exponentially, the error grows as time advances, but it stays within 5% overall.
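The effect can be illustrated with simple arithmetic. According to the bc_u and bc_v expressions in the training log, the velocity amplitude decays as exp(-2t), so a fixed absolute error becomes a larger fraction of the signal at later times. The absolute error of 1e-3 used below is a made-up number for illustration, not a measured value.

```python
import math

ABS_ERROR = 1e-3  # hypothetical constant absolute error in the velocity


def velocity_amplitude(t):
    """Peak magnitude of u and v at time t, from the exp(-2*t) decay factor."""
    return math.exp(-2.0 * t)


relative_errors = {t: ABS_ERROR / velocity_amplitude(t) for t in (0.0, 1.0, 2.0)}
for t, rel in relative_errors.items():
    print(f"t = {t:.1f}: amplitude = {velocity_amplitude(t):.4f}, relative error = {rel:.4f}")
```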