Implementing an Image Classification Application


Overview

It is recommended that you start from the image classification demo on the Android device to understand how to build the MindSpore Lite application project, configure dependencies, and use related APIs.

This tutorial demonstrates the on-device deployment process based on the image classification sample program on the Android device provided by the MindSpore team.

Select an image classification model.

Convert the model into a MindSpore Lite model.

Use the MindSpore Lite model for inference on the device. The following describes how to use the MindSpore Lite C++ APIs (through Android JNI) and a MindSpore Lite image classification model to perform on-device inference, classify the content captured by the device camera, and display the most probable classification result on the application's image preview screen.

Click to find Android image classification models and image classification sample code.

This example explains how to use the C++ API. MindSpore Lite also supports a Java API; see the image segmentation demo to learn more about it.

We provide the APK file corresponding to this example. You can scan the QR code below or download the APK file directly, and deploy it to Android devices for use.

Selecting a Model

The MindSpore team provides a series of preset device models that you can use in your application.

Click to download image classification models in MindSpore ModelZoo.

In addition, you can use the preset model to perform transfer learning to implement your image classification tasks.

Converting a Model

After you retrain a model provided by MindSpore, export it in the .mindir format. Then use the MindSpore Lite model conversion tool to convert the .mindir model into a .ms model.

Take the mobilenetv2 model as an example. Run the following command to convert it into a MindSpore Lite model for on-device inference:

```bash
./converter_lite --fmk=MINDIR --modelFile=mobilenetv2.mindir --outputFile=mobilenetv2.ms
```

Deploying an Application

The following section describes how to build and execute an on-device image classification task on MindSpore Lite.

Running Dependencies

Android Studio 3.2 or later and Android 4.0 or later are recommended.

Native development kit (NDK) 21.3

CMake 3.10.2

Android software development kit (SDK) 26 or later

JDK 1.8 or later

Building and Running

Load the sample source code to Android Studio and install the corresponding SDK. (After the SDK version is specified, Android Studio automatically installs the SDK.)

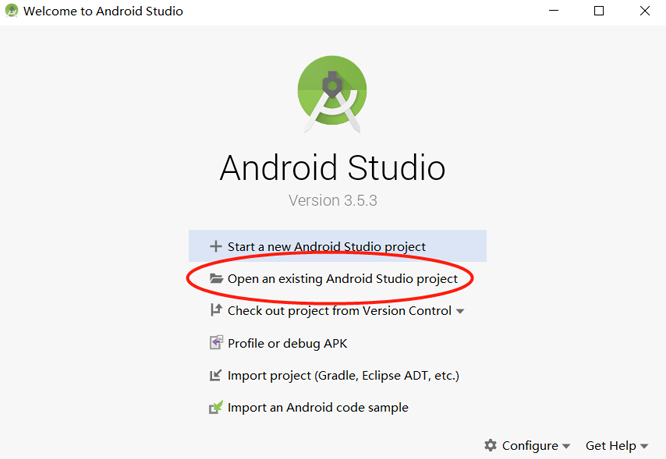

Start Android Studio, click

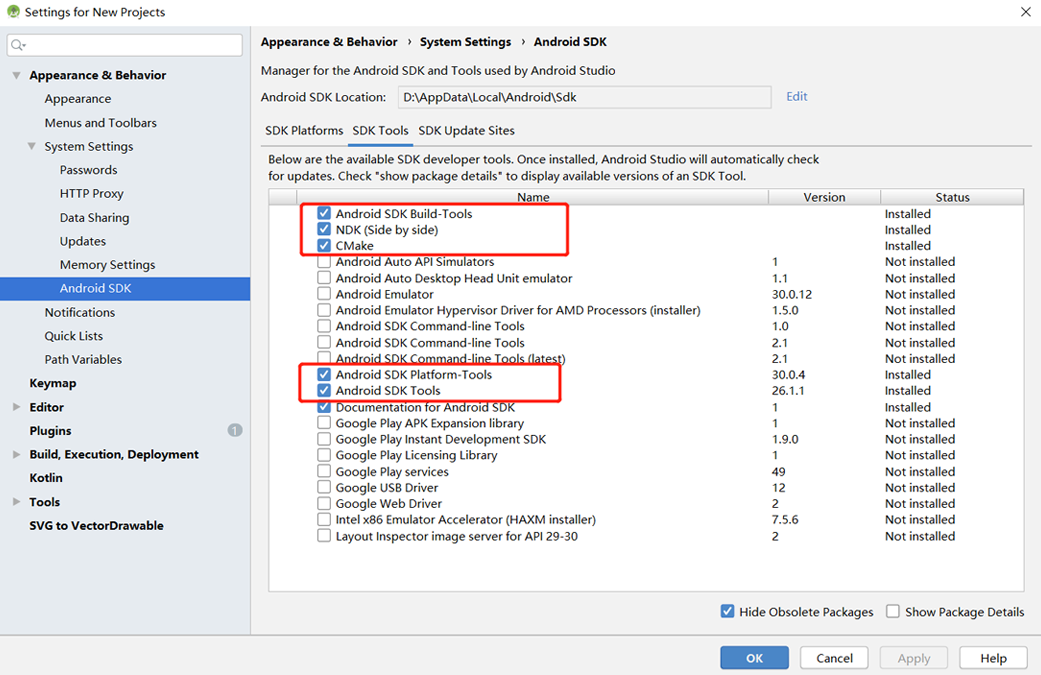

File > Settings > System Settings > Android SDK, and select the corresponding SDK. As shown in the following figure, select an SDK and clickOK. Android Studio automatically installs the SDK.

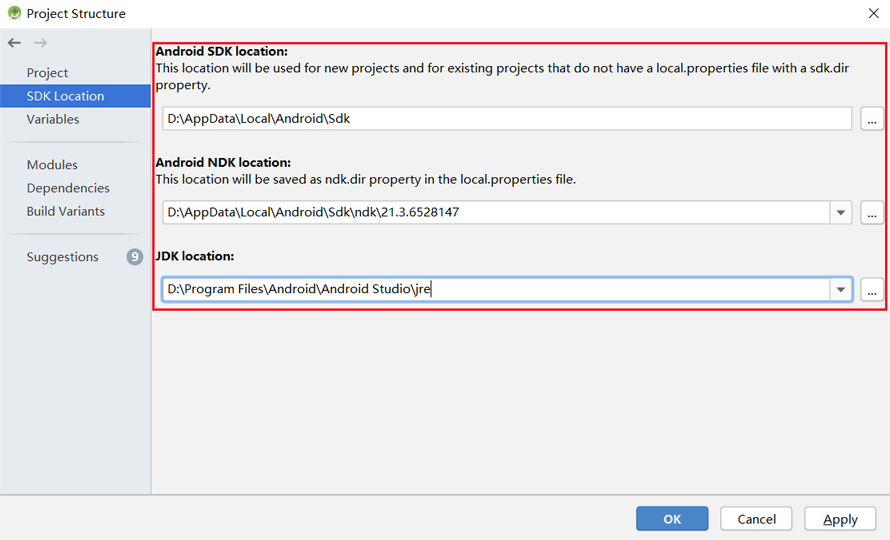

(Optional) If an NDK version issue occurs during the installation, manually download the corresponding NDK version (the sample code uses NDK 21.3) and specify its location in the Android NDK location field of Project Structure.

Connect to an Android device and run the image classification application.

Connect the Android device through a USB cable for debugging, then click Run 'app' to run the sample project on your device. For details about how to connect Android Studio to a device for debugging, see https://developer.android.com/studio/run/device.

USB debugging must be enabled on the phone for Android Studio to recognize it. On Huawei phones, it is generally enabled in Settings > System and Update > Developer Options > USB Debugging.

Continue the installation on the Android device. After the installation is complete, you can view the content captured by a camera and the inference result.

Detailed Description of the Sample Program

This image classification sample program on the Android device includes a Java layer and a JNI layer. At the Java layer, the Android Camera 2 API is used to enable a camera to obtain image frames and process images. At the JNI layer, the model inference process is completed in Runtime.

The following describes the JNI layer implementation of the sample program. Readers are expected to have basic Android development knowledge.

Sample Program Structure

```text
app
├── src/main
│   ├── assets # resource files
│   │   └── model # model files
│   │       └── mobilenetv2.ms # stored model file
│   │
│   ├── cpp # main logic encapsulation classes for model loading and prediction
│   │   ├── ...
│   │   ├── mindspore-lite-{version}-inference-android # MindSpore Lite version
│   │   ├── MindSporeNetnative.cpp # JNI methods related to MindSpore calling
│   │   └── MindSporeNetnative.h # header file
│   │
│   ├── java # application code at the Java layer
│   │   └── com.mindspore.classification
│   │       ├── gallery.classify # implementation related to image processing and MindSpore JNI calling
│   │       │   └── ...
│   │       └── widget # implementation related to camera enabling and drawing
│   │           └── ...
│   │
│   ├── res # resource files related to Android
│   └── AndroidManifest.xml # Android configuration file
│
├── CMakeLists.txt # CMake compilation entry file
│
├── build.gradle # other Android configuration file
├── download.gradle # MindSpore version download
└── ...
```

Configuring MindSpore Lite Dependencies

Calling MindSpore Lite C++ APIs at the Android JNI layer requires the related library files. You can build MindSpore Lite from source to generate these libraries; in that case, use the build command that includes the image preprocessing module.

In this example, the build process uses the app/download.gradle file to automatically download the mindspore-lite-{version}-inference-android package and saves it to the app/src/main/cpp directory.

Note: If the automatic download fails, manually download the library package mindspore-lite-{version}-inference-android.tar.gz and put it in the corresponding location.

Configure the native build in the app/build.gradle file:

```groovy
android {
    defaultConfig {
        externalNativeBuild {
            cmake {
                arguments "-DANDROID_STL=c++_shared"
            }
        }

        ndk {
            abiFilters 'armeabi-v7a', 'arm64-v8a'
        }
    }
}
```

Link the .so library files in the app/CMakeLists.txt file:

```cmake
# ============== Set MindSpore Dependencies. =============
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/third_party/flatbuffers/include)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/third_party/hiai_ddk/lib/aarch64)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION})
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/include)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/include/ir/dtype)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/include/schema)
include_directories(${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/minddata/include)

add_library(mindspore-lite SHARED IMPORTED)
add_library(minddata-lite SHARED IMPORTED)
add_library(hiai SHARED IMPORTED)
add_library(hiai_ir SHARED IMPORTED)
add_library(hiai_ir_build SHARED IMPORTED)

set_target_properties(mindspore-lite PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/lib/aarch64/libmindspore-lite.so)
set_target_properties(minddata-lite PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/minddata/lib/aarch64/libminddata-lite.so)
set_target_properties(hiai PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/third_party/hiai_ddk/lib/aarch64/libhiai.so)
set_target_properties(hiai_ir PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/third_party/hiai_ddk/lib/aarch64/libhiai_ir.so)
set_target_properties(hiai_ir_build PROPERTIES IMPORTED_LOCATION
        ${CMAKE_SOURCE_DIR}/src/main/cpp/${MINDSPORELITE_VERSION}/third_party/hiai_ddk/lib/aarch64/libhiai_ir_build.so)
# --------------- MindSpore Lite set End. --------------------

# Link target library.
target_link_libraries(
        ...
        # --- mindspore ---
        minddata-lite
        mindspore-lite
        hiai
        hiai_ir
        hiai_ir_build
        ...
)
```

Downloading and Deploying a Model File

In this example, the build process uses the app/download.gradle file to automatically download mobilenetv2.ms and saves it to the app/src/main/assets/model directory.

Note: If the automatic download fails, manually download the model file mobilenetv2.ms and put it in the corresponding location.

Writing On-Device Inference Code

Call MindSpore Lite C++ APIs at the JNI layer to implement on-device inference.

The key parts of the inference code are shown below. For the complete code, see app/src/main/cpp/MindSporeNetnative.cpp.

Load the MindSpore Lite model file and build the context, session, and computational graph for inference.

Load the model file:

At the Android Java layer, read the model file into a ByteBuffer, model_buffer, and pass it to the C++ layer through JNI. The C++ layer then converts model_buffer into a char buffer, modelBuffer:

```cpp
// model_buffer is the model data passed in by the Java layer.
jlong bufferLen = env->GetDirectBufferCapacity(model_buffer);
// GetDirectBufferCapacity returns -1 if model_buffer is not a direct buffer.
if (bufferLen <= 0) {
    MS_PRINT("error, bufferLen is invalid!");
    return (jlong) nullptr;
}

char *modelBuffer = CreateLocalModelBuffer(env, model_buffer);
if (modelBuffer == nullptr) {
    MS_PRINT("modelBuffer create failed!");
    return (jlong) nullptr;
}
```
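CreateLocalModelBuffer is not listed in this section. The sketch below assumes it copies the bytes of the direct ByteBuffer (whose address and length JNI exposes through GetDirectBufferAddress and GetDirectBufferCapacity) into memory owned by the native side. CopyModelBytes is a hypothetical name, and it returns a std::vector<char> instead of a raw new[] allocation so that the copy is freed automatically.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <vector>

// Hypothetical helper: copy model bytes handed over by the Java layer
// (for example, the address and capacity of a direct ByteBuffer) into
// memory owned by the native side. Returns an empty vector on bad input.
std::vector<char> CopyModelBytes(const void *src, std::int64_t capacity) {
    // GetDirectBufferCapacity reports -1 for non-direct buffers, and a
    // model file is never empty, so reject both cases up front.
    if (src == nullptr || capacity <= 0) {
        return {};
    }
    std::vector<char> model(static_cast<std::size_t>(capacity));
    std::memcpy(model.data(), src, model.size());
    return model;
}
```

Under this design, model.data() and model.size() would take the place of modelBuffer and bufferLen when the model is imported.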

Build the context, session, and computational graph for inference:

Build the context and set the session parameters, then create a session from the context and the model data:

```cpp
// Create a MindSpore network inference environment.
// labelEnv is later handed back to this layer as netEnv when an image is classified.
void **labelEnv = new void *;
MSNetWork *labelNet = new MSNetWork;
*labelEnv = labelNet;

mindspore::lite::Context *context = new mindspore::lite::Context;
context->thread_num_ = num_thread;
context->device_list_[0].device_info_.cpu_device_info_.cpu_bind_mode_ = mindspore::lite::NO_BIND;
context->device_list_[0].device_info_.cpu_device_info_.enable_float16_ = false;
context->device_list_[0].device_type_ = mindspore::lite::DT_CPU;

labelNet->CreateSessionMS(modelBuffer, bufferLen, context);
delete context;
```
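The sample appears to return labelEnv to the Java layer as a jlong, and the classification step turns the received netEnv back into an MSNetWork pointer. The sketch below isolates that pointer-to-integer round trip. It assumes jlong is a signed 64-bit integer, as the JNI specification defines it, and uses std::int64_t in its place. FakeNet is a hypothetical stand-in for MSNetWork, and the ReleaseHandle cleanup path is an assumption of this sketch, since the sample's release code is not shown in this section.

```cpp
#include <cstdint>
#include <string>

// Hypothetical stand-in for MSNetWork.
struct FakeNet {
    std::string name;
};

// Wrap a network the way the sample does: a heap-allocated void* slot
// that points at the network, returned as a 64-bit integer handle.
std::int64_t CreateHandle(FakeNet *net) {
    void **env = new void *;
    *env = net;
    return reinterpret_cast<std::int64_t>(env);
}

// Recover the network from the handle; a zero handle yields nullptr.
FakeNet *NetFromHandle(std::int64_t handle) {
    if (handle == 0) {
        return nullptr;
    }
    void **env = reinterpret_cast<void **>(handle);
    return static_cast<FakeNet *>(*env);
}

// Free both the network and the slot that pointed at it.
void ReleaseHandle(std::int64_t handle) {
    if (handle == 0) {
        return;
    }
    void **env = reinterpret_cast<void **>(handle);
    delete static_cast<FakeNet *>(*env);
    delete env;
}
```

Because the Java side only ever sees an integer, every handle must be released exactly once from native code; otherwise both allocations leak.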

Based on the model data in modelBuffer, CreateSessionMS creates the session, imports the model, and compiles the computational graph for inference:

```cpp
void MSNetWork::CreateSessionMS(char *modelBuffer, size_t bufferLen, mindspore::lite::Context *ctx) {
    session_ = mindspore::session::LiteSession::CreateSession(ctx);
    if (session_ == nullptr) {
        MS_PRINT("Create Session failed.");
        return;
    }

    // Import the model from the buffer.
    model_ = mindspore::lite::Model::Import(modelBuffer, bufferLen);
    if (model_ == nullptr) {
        ReleaseNets();
        MS_PRINT("Import model failed.");
        return;
    }

    // Compile the computational graph for this session.
    int ret = session_->CompileGraph(model_);
    if (ret != mindspore::lite::RET_OK) {
        ReleaseNets();
        MS_PRINT("CompileGraph failed.");
        return;
    }
}
```

Convert the input image into the input tensor of the MindSpore model.

Convert the Android bitmap srcBitmap into the LiteMat format lite_mat_bgr, then resize, crop, and normalize it into lite_norm_mat_cut. Its channel, width, and height are recorded in inputDims, and its pixel data, a float array in HWC (height, width, channel) order named dataHWC, is copied into the model's input tensor inTensor:

```cpp
if (!BitmapToLiteMat(env, srcBitmap, &lite_mat_bgr)) {
    MS_PRINT("BitmapToLiteMat error");
    return NULL;
}
if (!PreProcessImageData(lite_mat_bgr, &lite_norm_mat_cut)) {
    MS_PRINT("PreProcessImageData error");
    return NULL;
}

ImgDims inputDims;
inputDims.channel = lite_norm_mat_cut.channel_;
inputDims.width = lite_norm_mat_cut.width_;
inputDims.height = lite_norm_mat_cut.height_;

// Get the MindSpore inference environment created in loadModel().
void **labelEnv = reinterpret_cast<void **>(netEnv);
if (labelEnv == nullptr) {
    MS_PRINT("MindSpore error, labelEnv is a nullptr.");
    return NULL;
}
MSNetWork *labelNet = static_cast<MSNetWork *>(*labelEnv);

auto mSession = labelNet->session();
if (mSession == nullptr) {
    MS_PRINT("MindSpore error, Session is a nullptr.");
    return NULL;
}
MS_PRINT("MindSpore get session.");

auto msInputs = mSession->GetInputs();
if (msInputs.size() == 0) {
    MS_PRINT("MindSpore error, msInputs.size() equals 0.");
    return NULL;
}
auto inTensor = msInputs.front();

float *dataHWC = reinterpret_cast<float *>(lite_norm_mat_cut.data_ptr_);
int elementNum = inputDims.channel * inputDims.width * inputDims.height;
// Make sure the preprocessed image matches the input tensor before copying.
if (inTensor->ElementsNum() != elementNum) {
    MS_PRINT("MindSpore error, input tensor size mismatch.");
    return NULL;
}
// Copy dataHWC to the model input tensor.
memcpy(inTensor->MutableData(), dataHWC, elementNum * sizeof(float));
```
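The copy size in this step is channel × width × height × sizeof(float), which is correct only because dataHWC is one contiguous float array in HWC order. The sketch below makes that layout explicit. Dims, ElementCount, HwcIndex, and CopyToInput are hypothetical names modeled on ImgDims, and a std::vector<float> stands in for the input tensor's memory. For the 224 × 224 × 3 image produced by the preprocessing step, one copy moves 150,528 floats, or 602,112 bytes with 4-byte floats.

```cpp
#include <cstddef>
#include <cstring>
#include <vector>

// Hypothetical mirror of the sample's ImgDims.
struct Dims {
    int channel;
    int width;
    int height;
};

// Number of float elements in one image.
std::size_t ElementCount(const Dims &d) {
    return static_cast<std::size_t>(d.channel) * d.width * d.height;
}

// Offset of pixel (h, w), channel c, in an HWC buffer: the channels of one
// pixel are adjacent, and pixels are stored row by row.
std::size_t HwcIndex(const Dims &d, int h, int w, int c) {
    return (static_cast<std::size_t>(h) * d.width + w) * d.channel + c;
}

// Copy an HWC image into a destination buffer only when the sizes agree,
// the same precaution worth taking before writing into an input tensor.
bool CopyToInput(const float *src, const Dims &d, std::vector<float> &dst) {
    std::size_t n = ElementCount(d);
    if (src == nullptr || dst.size() != n) {
        return false;
    }
    std::memcpy(dst.data(), src, n * sizeof(float));
    return true;
}
```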

PreProcessImageData resizes the input image and normalizes its data as follows:

```cpp
bool PreProcessImageData(const LiteMat &lite_mat_bgr, LiteMat *lite_norm_mat_ptr) {
    bool ret = false;
    LiteMat lite_mat_resize;
    LiteMat &lite_norm_mat_cut = *lite_norm_mat_ptr;

    // Resize the image to 256 x 256 with bilinear interpolation.
    ret = ResizeBilinear(lite_mat_bgr, lite_mat_resize, 256, 256);
    if (!ret) {
        MS_PRINT("ResizeBilinear error");
        return false;
    }

    // Convert the pixel values to float and scale them to [0, 1].
    LiteMat lite_mat_convert_float;
    ret = ConvertTo(lite_mat_resize, lite_mat_convert_float, 1.0 / 255.0);
    if (!ret) {
        MS_PRINT("ConvertTo error");
        return false;
    }

    // Crop the central 224 x 224 region, starting at (16, 16).
    LiteMat lite_mat_cut;
    ret = Crop(lite_mat_convert_float, lite_mat_cut, 16, 16, 224, 224);
    if (!ret) {
        MS_PRINT("Crop error");
        return false;
    }

    // Normalize each channel with the given means and standard deviations.
    std::vector<float> means = {0.485, 0.456, 0.406};
    std::vector<float> stds = {0.229, 0.224, 0.225};
    SubStractMeanNormalize(lite_mat_cut, lite_norm_mat_cut, means, stds);
    return true;
}
```
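The crop offset of 16 follows from centering a 224 × 224 window in the 256 × 256 resized image: (256 − 224) / 2 = 16. The sketch below reproduces the center crop and the per-channel mean/std normalization on a plain float HWC buffer, so the arithmetic can be checked without the image preprocessing library. CenterCrop and Normalize are hypothetical helpers, not the library's Crop and SubStractMeanNormalize; the bilinear resize is omitted, and the input is assumed to be already scaled to [0, 1].

```cpp
#include <cstddef>
#include <vector>

// HWC float image: data.size() == height * width * channels.
struct Image {
    int width;
    int height;
    int channels;
    std::vector<float> data;
};

// Hypothetical helper: cut an out_w x out_h window centered in the image.
// Assumes out_w <= width and out_h <= height.
Image CenterCrop(const Image &src, int out_w, int out_h) {
    int x0 = (src.width - out_w) / 2;   // 16 when cropping 256 -> 224
    int y0 = (src.height - out_h) / 2;
    Image dst{out_w, out_h, src.channels, {}};
    dst.data.reserve(static_cast<std::size_t>(out_w) * out_h * src.channels);
    for (int y = 0; y < out_h; ++y) {
        for (int x = 0; x < out_w; ++x) {
            for (int c = 0; c < src.channels; ++c) {
                std::size_t idx =
                    (static_cast<std::size_t>(y0 + y) * src.width + (x0 + x)) * src.channels + c;
                dst.data.push_back(src.data[idx]);
            }
        }
    }
    return dst;
}

// Hypothetical helper: in place, value = (value - means[c]) / stds[c].
void Normalize(Image &img, const std::vector<float> &means, const std::vector<float> &stds) {
    for (std::size_t i = 0; i < img.data.size(); ++i) {
        std::size_t c = i % img.channels;  // channel index in HWC order
        img.data[i] = (img.data[i] - means[c]) / stds[c];
    }
}
```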

Run inference on the input tensor, then obtain and post-process the output tensor.

With the graph compiled and the input data in place, run on-device inference:

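```cpp
// After the model and image tensor data are loaded, run inference.
auto status = mSession->RunGraph();
if (status != mindspore::lite::RET_OK) {
    MS_PRINT("MindSpore RunGraph failed.");
    return NULL;
}
```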

Collect the model's output tensors into msOutputs, keyed by tensor name. ProcessRunnetResult then combines msOutputs with the category label array to produce resultCharData, the text displayed in the app, which is returned to the Java layer as a string:

```cpp
auto names = mSession->GetOutputTensorNames();
std::unordered_map<std::string, mindspore::tensor::MSTensor *> msOutputs;
for (const auto &name : names) {
    auto temp_dat = mSession->GetOutputByTensorName(name);
    msOutputs.insert(std::pair<std::string, mindspore::tensor::MSTensor *>{name, temp_dat});
}

std::string resultStr = ProcessRunnetResult(::RET_CATEGORY_SUM, ::labels_name_map, msOutputs);

const char *resultCharData = resultStr.c_str();
return (env)->NewStringUTF(resultCharData);
```
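According to the overview, the app displays the most probable classification result. How the Java layer picks it from resultCharData is not covered in this section; the sketch below assumes the simplest rule, taking the category with the highest score. TopCategory is a hypothetical helper that works on plain vectors rather than on MSTensor.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: return the label with the highest score, or an
// empty string when the inputs are empty or have different lengths.
std::string TopCategory(const std::vector<std::string> &labels, const std::vector<float> &scores) {
    if (labels.empty() || labels.size() != scores.size()) {
        return "";
    }
    // max_element returns the first maximum, so ties go to the earlier label.
    auto best = std::max_element(scores.begin(), scores.end());
    return labels[static_cast<std::size_t>(best - scores.begin())];
}
```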

Post-process the output data. Obtain the output tensor outputTensor from msOutputs and copy its values into the score array scores, one entry per category in labels_name_map. Each category has its own confidence threshold in g_thres_map (defined elsewhere in the sample), derived from the training data. Each raw score is rescaled piecewise-linearly so that a score equal to its category's threshold maps to unifiedThre (0.5): for raw scores in [0, 1], scores below the threshold map into [0, 0.5) and scores at or above it map into [0.5, 1.0]. A rescaled score of at least 0.5 therefore means the image is considered to belong to that category. Finally, the function returns categoryScore, a string that pairs each category name with its score:

```cpp
std::string ProcessRunnetResult(const int RET_CATEGORY_SUM, const char *const labels_name_map[],
                                std::unordered_map<std::string, mindspore::tensor::MSTensor *> msOutputs) {
    // The mobilenetv2.ms model has only one output branch, so take the first map element.
    if (msOutputs.empty()) {
        MS_PRINT("MindSpore error, no output tensor.");
        return "";
    }
    auto outputTensor = msOutputs.begin()->second;
    int tensorNum = outputTensor->ElementsNum();
    MS_PRINT("Number of tensor elements:%d", tensorNum);
    if (tensorNum < RET_CATEGORY_SUM) {
        MS_PRINT("MindSpore error, too few output elements.");
        return "";
    }

    // Copy the raw score of each category (std::vector avoids a variable-length array).
    float *temp_scores = static_cast<float *>(outputTensor->MutableData());
    std::vector<float> scores(temp_scores, temp_scores + RET_CATEGORY_SUM);

    // Rescale each score so that its category threshold maps to unifiedThre.
    const float unifiedThre = 0.5;
    const float probMax = 1.0;
    for (int i = 0; i < RET_CATEGORY_SUM; ++i) {
        float threshold = g_thres_map[i];
        float tmpProb = scores[i];
        if (tmpProb < threshold) {
            tmpProb = tmpProb / threshold * unifiedThre;
        } else {
            tmpProb = (tmpProb - threshold) / (probMax - threshold) * unifiedThre + unifiedThre;
        }
        scores[i] = tmpProb;
    }

    // Convert the score of each category into the text displayed in the app.
    std::string categoryScore = "";
    for (int i = 0; i < RET_CATEGORY_SUM; ++i) {
        categoryScore += labels_name_map[i];
        categoryScore += ":";
        categoryScore += std::to_string(scores[i]);
        categoryScore += ";";
    }
    return categoryScore;
}
```
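The threshold rescaling is a two-piece linear map. With raw score p, per-category threshold t, and unifiedThre u = 0.5, it computes p · u / t when p < t and u + (p − t) · u / (1 − t) otherwise, so p = t lands exactly on 0.5. The sketch below repeats that mapping and the "label:score;" formatting as standalone functions so both can be checked in isolation. RescaleScore and FormatScores are hypothetical names, and raw scores are assumed to lie in [0, 1].

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Map a raw score p in [0, 1] so that the category threshold t becomes 0.5:
// [0, t) -> [0, 0.5) and [t, 1] -> [0.5, 1].
float RescaleScore(float p, float t) {
    const float unifiedThre = 0.5f;
    const float probMax = 1.0f;
    if (p < t) {
        return p / t * unifiedThre;
    }
    return (p - t) / (probMax - t) * unifiedThre + unifiedThre;
}

// Build the "label:score;" string; std::to_string prints six decimal places.
std::string FormatScores(const std::vector<std::string> &labels, const std::vector<float> &scores) {
    std::string out;
    for (std::size_t i = 0; i < labels.size() && i < scores.size(); ++i) {
        out += labels[i];
        out += ":";
        out += std::to_string(scores[i]);
        out += ";";
    }
    return out;
}
```

For example, with a threshold of 0.3, a raw score of 0.65 maps to 0.75, which is above 0.5, so that category would count as a match.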