用于时空PDE系统的物理编码消息传递图神经网络

偏微分方程(PDEs)控制的复杂动力系统广泛存在于各个学科当中。近年来,数据驱动的神经网络模型在预测时空动态上取得了极好的效果。

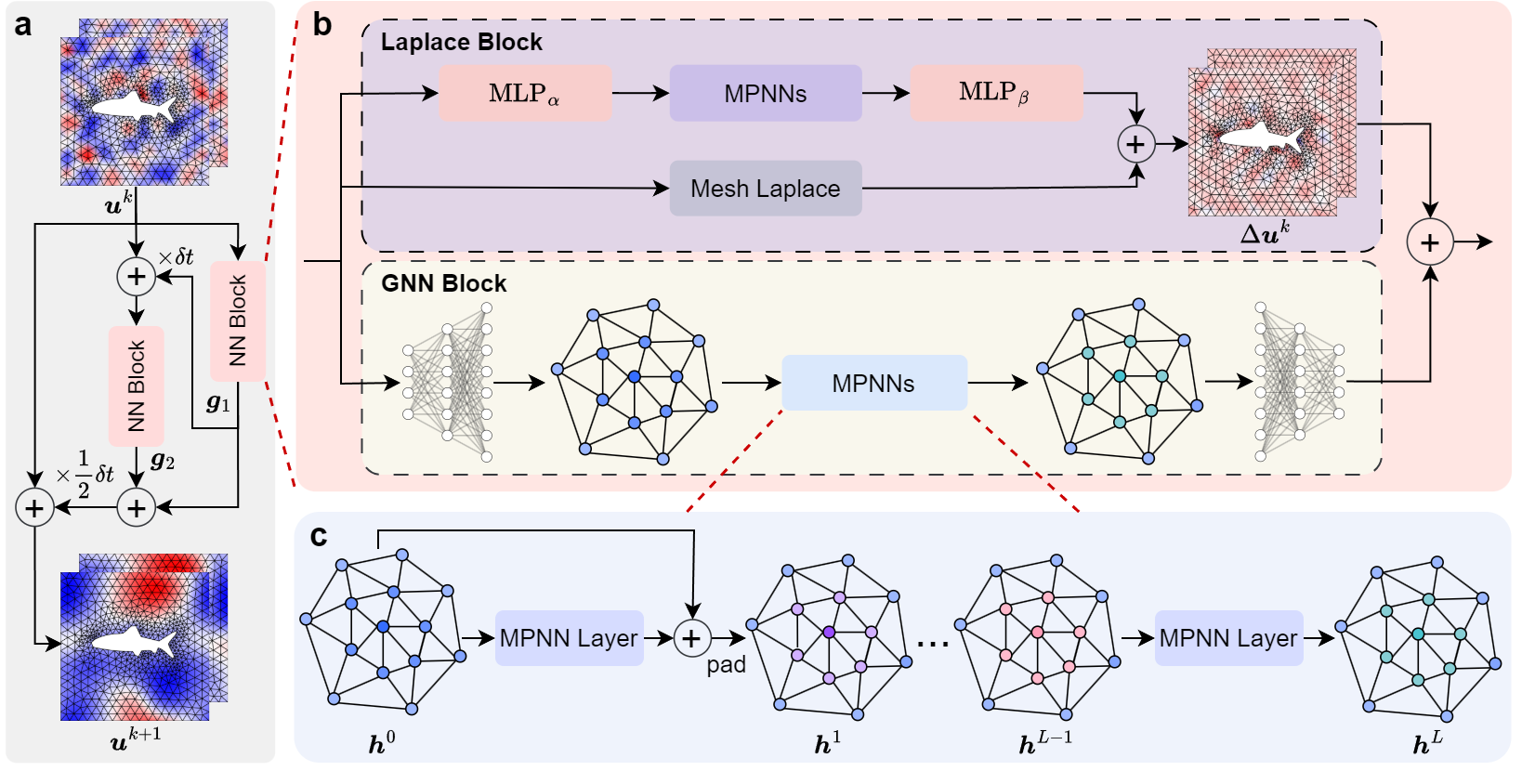

物理编码的消息传递图网络(PhyMPGN: Physics-encoded Message Passing Graph Network for spatiotemporal PDE systems),可以使用少量训练数据在不规则计算域上建模时空PDE系统。具体来说,

提出了一个使用消息传递机制的物理编码图学习模型,使用二阶龙格库塔(Runge-Kutta)数值方案进行时间步进

考虑到物理现象中普遍存在扩散过程,设计了一个可学习的Laplace Block,编码了离散拉普拉斯-贝尔特拉米算子(Laplace-Beltrami Operator)

提出了一个新颖的填充策略在模型中编码不同类型的边界条件

论文链接: https://arxiv.org/abs/2410.01337

问题描述

考虑由如下形式控制的时空PDE系统:

其中\(\boldsymbol u(\boldsymbol x, y) \in \mathbb{R}^m\)是具有\(m\)个分量的状态变量向量,例如速度、温度或者压力等,它的定义在时空域\(\{ \boldsymbol x, t \} \in \Omega \times [0, \mathcal{T}]\)上;\(\dot{\boldsymbol u}\)代表\(\boldsymbol u\)对时间的导数,\(\boldsymbol F\)是依赖于当前状态\(\boldsymbol u\)和其空间导数的非线性算子。

假设在空间域\(\Omega\)上有着非均匀且稀疏的观测结点\(\{ \boldsymbol x_0, \dots, \boldsymbol x_{N-1} \}\)(即,非结构化网格),在时刻\(t_0, \dots, t_{T-1}\),这些结点上的观测为\(\{ \boldsymbol U(t_0), \dots, \boldsymbol U(t_{T-1}) \}\),其中的\(\boldsymbol U(t_i) = \{ \boldsymbol u(\boldsymbol x_0, t_i), \dots, \boldsymbol u (\boldsymbol x_{N-1}, t_i) \}\)代表某些物理量。考虑到很多物理现象包含扩散过程,我们假设PDE中的扩散项是已知的先验知识。我们的目标是使用少量训练数据学习一个图神经网络模型,在稀疏非结构网格上预测不同的时空动态系统,处理不同的边界条件,为任意的初始条件产生后续动态轨迹。

本案例展示PhyMPGN如何求解圆柱绕流(Cylinder Flow)问题。

圆柱绕流 (Cylinder Flow) 动态系统由如下的Navier-Stokes方程控制

其中流体密度\(\rho=1\),流体粘度系数\(\mu=5.0\times10^{-3}\),外力\(f=0\)。该圆柱绕流系统左边界为入口,右边界为出口,圆柱表面为无滑移边界条件,上下边界为对称边界条件。本案例关注于,在保持流体密度\(\rho\),圆柱大小\(D=2\)和流体粘度系数\(\mu\)不变的情况下,泛化入射流速度\(U_m\)。因为雷诺数定义为\(Re=\rho U_m D / \mu\),所以泛化入射流速度\(U_m\)也意味着泛化不同的雷诺数。

模型方法

对于式(1),可以使用二阶龙格库塔(Runge-Kutta, RK2)方案进行离散:

其中\(\boldsymbol u^k\)为\(t^k\)时刻的状态变量,\(\delta t\)为时刻\(t^k\)和\(t^{k+1}\)之间的时间间隔。根据式(3),我们构建一个GNN来学习非线性算子\(\boldsymbol F\).

如图所示,我们使用NN block来学习非线性算子\(\boldsymbol F\)。NN block又可以分为两部分:采用编码器-处理器-解码器架构的GNN block和可学习的Laplace block。因为物理现象中扩散过程的普遍存在性,我们设计了可学习的Laplace block,编码离散拉普拉斯贝尔特拉米算子(Laplace-Beltrami operator),来学习由PDE中扩散项导致的增量;而GNN block来学习PDE中其他项导致的增量。

准备环节

确保已安装相关依赖库,如MindSpore等

确保已下载好圆柱绕流数据

确保在yamls/train.yaml配置文件中已配置好数据和模型权重等相关保存路径

代码执行步骤

代码执行流程如下步骤:

读取配置文件

构建数据集

构建模型

模型训练

模型推理

读取配置文件

[1]:

from mindflow.utils import log_config, load_yaml_config, print_log

from easydict import EasyDict

import os.path as osp

from pathlib import Path

def load_config(config_file_path, train):

config = load_yaml_config(config_file_path)

config['train'] = train

config = EasyDict(config)

log_dir = './logs'

if train:

log_file = f'phympgn-{config.experiment_name}'

else:

log_file = f'phympgn-{config.experiment_name}-te'

if not osp.exists(osp.join(log_dir, f'{log_file}.log')):

Path(osp.join(log_dir, f'{log_file}.log')).touch()

log_config(log_dir, log_file)

print_log(config)

return config

[ ]:

config_file_path = 'yamls/train.yaml'

config = load_config(config_file_path=config_file_path, train=True)

[ ]:

import mindspore as ms

ms.set_device(device_target='Ascend', device_id=7)

构建数据集

[ ]:

from src import PDECFDataset, get_data_loader

print_log('Train...')

print_log('Loading training data...')

tr_dataset = PDECFDataset(

root=config.path.data_root_dir,

raw_files=config.path.tr_raw_data,

dataset_start=config.data.dataset_start,

dataset_used=config.data.dataset_used,

time_start=config.data.time_start,

time_used=config.data.time_used,

window_size=config.data.tr_window_size,

training=True

)

tr_loader = get_data_loader(

dataset=tr_dataset,

batch_size=config.optim.batch_size

)

print_log('Loading validation data...')

val_dataset = PDECFDataset(

root=config.path.data_root_dir,

raw_files=config.path.val_raw_data,

dataset_start=config.data.dataset_start,

dataset_used=config.data.dataset_used,

time_start=config.data.time_start,

time_used=config.data.time_used,

window_size=config.data.val_window_size

)

val_loader = get_data_loader(

dataset=val_dataset,

batch_size=config.optim.batch_size

)

构建模型

[ ]:

from src import PhyMPGN

print_log('Building model...')

model = PhyMPGN(

encoder_config=config.network.encoder_config,

mpnn_block_config=config.network.mpnn_block_config,

decoder_config=config.network.decoder_config,

laplace_block_config=config.network.laplace_block_config,

integral=config.network.integral

)

print_log(f'Number of parameters: {model.num_params}')

模型训练

[ ]:

from mindflow import get_multi_step_lr

from mindspore import nn

import numpy as np

from src import Trainer, TwoStepLoss

lr_scheduler = get_multi_step_lr(

lr_init=config.optim.lr,

milestones=list(np.arange(0, config.optim.start_epoch+config.optim.epochs,

step=config.optim.steplr_size)[1:]),

gamma=config.optim.steplr_gamma,

steps_per_epoch=len(tr_loader),

last_epoch=config.optim.start_epoch+config.optim.epochs-1

)

optimizer = nn.AdamWeightDecay(model.trainable_params(), learning_rate=lr_scheduler,

eps=1.0e-8, weight_decay=1.0e-2)

trainer = Trainer(

model=model, optimizer=optimizer, scheduler=lr_scheduler, config=config,

loss_func=TwoStepLoss()

)

trainer.train(tr_loader, val_loader)

[Epoch 1/1600] Batch Time: 2.907 (3.011) Data Time: 0.021 (0.035) Graph Time: 0.004 (0.004) Grad Time: 2.863 (2.873) Optim Time: 0.006 (0.022)

[Epoch 1/1600] Batch Time: 1.766 (1.564) Data Time: 0.022 (0.044) Graph Time: 0.003 (0.004)

[Epoch 1/1600] tr_loss: 1.36e-02 val_loss: 1.29e-02 [MIN]

[Epoch 2/1600] Batch Time: 3.578 (3.181) Data Time: 0.024 (0.038) Graph Time: 0.004 (0.004) Grad Time: 3.531 (3.081) Optim Time: 0.004 (0.013)

[Epoch 2/1600] Batch Time: 1.727 (1.664) Data Time: 0.023 (0.042) Graph Time: 0.003 (0.004)

[Epoch 2/1600] tr_loss: 1.15e-02 val_loss: 9.55e-03 [MIN]

…

模型推理

[ ]:

config_file_path = 'yamls/train.yaml'

config = load_config(config_file_path=config_file_path, train=False)

[ ]:

import mindspore as ms

ms.set_device(device_target='Ascend', device_id=7)

[ ]:

from src import PDECFDataset, get_data_loader, Trainer, PhyMPGN

from mindspore import nn

# test datasets

te_dataset = PDECFDataset(

root=config.path.data_root_dir,

raw_files=config.path.te_raw_data,

dataset_start=config.data.te_dataset_start,

dataset_used=config.data.te_dataset_used,

time_start=config.data.time_start,

time_used=config.data.time_used,

window_size=config.data.te_window_size,

training=False

)

te_loader = get_data_loader(

dataset=te_dataset,

batch_size=1,

shuffle=False,

)

print_log('Building model...')

model = PhyMPGN(

encoder_config=config.network.encoder_config,

mpnn_block_config=config.network.mpnn_block_config,

decoder_config=config.network.decoder_config,

laplace_block_config=config.network.laplace_block_config,

integral=config.network.integral

)

print_log(f'Number of parameters: {model.num_params}')

trainer = Trainer(

model=model, optimizer=None, scheduler=None, config=config,

loss_func=nn.MSELoss()

)

print_log('Test...')

trainer.test(te_loader)

.[TEST 0/9] MSE at 2000t: 5.06e-04, armse: 0.058, time: 185.3432s

[TEST 1/9] MSE at 2000t: 4.83e-04, armse: 0.040, time: 186.3979s

[TEST 2/9] MSE at 2000t: 1.95e-03, armse: 0.062, time: 177.0030s

…

[TEST 8/9] MSE at 2000t: 1.42e-01, armse: 0.188, time: 163.1219s

[TEST 9] Mean Loss: 4.88e-02, Mean armse: 0.137, corre: 0.978, time: 173.3827s