mindspore.nn.Softsign

- class mindspore.nn.Softsign

Applies the softsign activation function element-wise.
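For quick reference, the softsign function is commonly defined as follows. The formula is stated here for convenience and is not quoted from this page; it agrees with the values in the Examples section (for instance, an input of 2 gives 2 / (1 + 2) ≈ 0.6666667).

$$
\text{softsign}(x) = \frac{x}{1 + |x|}
$$

The output always lies in the open interval (-1, 1) and approaches ±1 only as |x| grows large.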

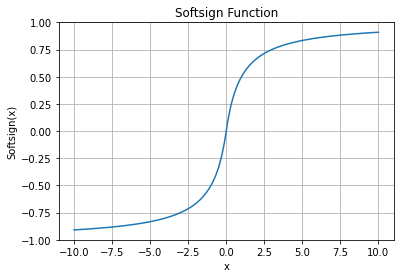

Softsign Activation Function Graph:

Refer to mindspore.ops.softsign() for more details.

- Supported Platforms:

  Ascend GPU CPU

Examples

    >>> import mindspore
    >>> from mindspore import Tensor, nn
    >>> import numpy as np
    >>> x = Tensor(np.array([0, -1, 2, 30, -30]), mindspore.float32)
    >>> softsign = nn.Softsign()
    >>> output = softsign(x)
    >>> print(output)
    [ 0. -0.5 0.6666667 0.9677419 -0.9677419]
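The values above can be cross-checked without MindSpore. The following is a minimal NumPy sketch, assuming the usual definition x / (1 + |x|); `softsign_reference` is a hypothetical helper name introduced only for illustration and is not part of the MindSpore API.

```python
import numpy as np


def softsign_reference(x):
    """Hypothetical helper: element-wise softsign, x / (1 + |x|), in plain NumPy."""
    x = np.asarray(x, dtype=np.float32)
    return x / (1.0 + np.abs(x))


# Same input as the example above.
x = np.array([0, -1, 2, 30, -30], dtype=np.float32)
expected = softsign_reference(x)
print(expected)
```

Comparing `expected` with `output.asnumpy()` from the MindSpore example (for instance via `np.allclose`) is one way to confirm that both agree to within float32 precision.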