mindspore.nn.SoftShrink

- class mindspore.nn.SoftShrink(lambd=0.5)

Apply the SoftShrink function element-wise.

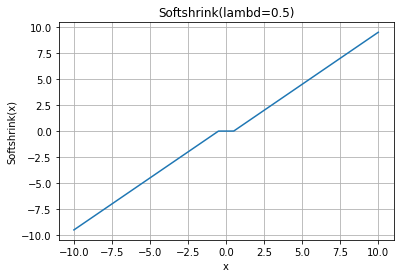

\[\begin{split}\text{SoftShrink}(x) = \begin{cases} x - \lambda, & \text{ if } x > \lambda \\ x + \lambda, & \text{ if } x < -\lambda \\ 0, & \text{ otherwise } \end{cases}\end{split}\]SoftShrink Activation Function Graph:

- Parameters

lambd (number, optional) – The threshold \(\lambda\) in the SoftShrink formula. Must be no less than zero. Default: 0.5.

- Inputs:

input (Tensor) - The input tensor. Supported dtypes:

Ascend: mindspore.float16, mindspore.float32, mindspore.bfloat16.

CPU/GPU: mindspore.float16, mindspore.float32.

- Outputs:

Tensor, with the same shape and dtype as input.

- Supported Platforms:

Ascend GPU CPU

Examples

    >>> import mindspore
    >>> input = mindspore.tensor([[ 0.5297,  0.7871,  1.1754], [ 0.7836,  0.6218, -1.1542]], mindspore.float16)
    >>> softshrink = mindspore.nn.SoftShrink()
    >>> output = softshrink(input)
    >>> print(output)
    [[ 0.02979  0.287    0.676  ]
     [ 0.2837   0.1216  -0.6543 ]]
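
The piecewise definition can be checked by hand with a minimal plain-Python sketch. The helper `soft_shrink` below is hypothetical: it is not part of MindSpore, and it is written only to mirror the three cases of the formula for a single float. It runs in double precision, so its results should agree with the float16 output of the example only to within roughly 1e-3.

```python
def soft_shrink(x, lambd=0.5):
    """Reference SoftShrink for one Python float (illustrative, not a MindSpore API)."""
    if lambd < 0:
        # The documented constraint: the threshold must be no less than zero.
        raise ValueError("lambd must be no less than zero")
    if x > lambd:
        return x - lambd   # shrink positive values toward zero
    if x < -lambd:
        return x + lambd   # shrink negative values toward zero
    return 0.0             # everything inside [-lambd, lambd] maps to zero


# Same values as the MindSpore example, evaluated element by element.
values = [[0.5297, 0.7871, 1.1754], [0.7836, 0.6218, -1.1542]]
result = [[soft_shrink(v) for v in row] for row in values]
for row in result:
    print([round(v, 4) for v in row])
```

Values whose magnitude does not exceed the threshold, such as `soft_shrink(0.3)` or `soft_shrink(-0.5)` with the default `lambd`, come out as exactly zero. That is the "otherwise" branch of the formula.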