mindspore.nn.ReLU6

- class mindspore.nn.ReLU6

Computes the ReLU6 activation function element-wise.

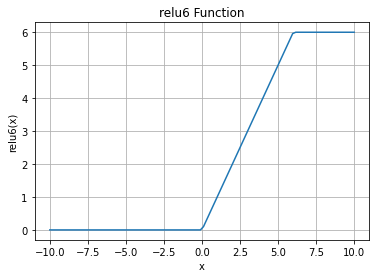

ReLU6 is similar to ReLU with a upper limit of 6, which if the inputs are greater than 6, the outputs will be suppressed to 6. It computes element-wise as

\[Y = \min(\max(0, x), 6)\]ReLU6 Activation Function Graph:
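To make the formula concrete, here is a minimal NumPy sketch of Y = min(max(0, x), 6). It is not MindSpore's implementation, and the function name `relu6_reference` is hypothetical, chosen only for illustration. The sample input includes a value above 6 so that the upper clip is visible.

```python
import numpy as np


def relu6_reference(x):
    """Hypothetical NumPy sketch of Y = min(max(0, x), 6), applied element-wise."""
    x = np.asarray(x)
    # max(0, x) zeroes the negative entries; min(..., 6) caps entries above 6.
    return np.minimum(np.maximum(x, 0), 6).astype(x.dtype)


x = np.array([-3.0, 0.0, 2.5, 6.0, 9.0], dtype=np.float32)
result = relu6_reference(x)
# result holds 0, 0, 2.5, 6, 6 (dtype float32)
```

Because the two bounds are the constants 0 and 6, `np.clip(x, 0, 6)` produces the same values.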

- Inputs:

x (Tensor) - The input of ReLU6, a Tensor of any valid shape with data type float16 or float32.

- Outputs:

Tensor, with the same shape and data type as x.

- Raises:

TypeError - If the dtype of x is neither float16 nor float32.
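Taken together, the Inputs, Outputs, and Raises entries describe a simple contract: float16 or float32 in, the same dtype (and, since the operation is element-wise, the same shape) out, and a TypeError for any other dtype. The sketch below models that contract in plain NumPy, assuming an up-front dtype check is a fair stand-in for the documented behavior. `checked_relu6` and `_SUPPORTED_DTYPES` are hypothetical names, and the error message is illustrative rather than the text MindSpore actually raises.

```python
import numpy as np

# Hypothetical whitelist matching the dtypes listed under Inputs/Raises.
_SUPPORTED_DTYPES = (np.float16, np.float32)


def checked_relu6(x):
    """Hypothetical NumPy stand-in for nn.ReLU6: validate dtype, then clip to [0, 6]."""
    x = np.asarray(x)
    if x.dtype not in _SUPPORTED_DTYPES:
        # Illustrative message; MindSpore's actual wording may differ.
        raise TypeError(f"dtype of x must be float16 or float32, but got {x.dtype}")
    # Element-wise min(max(0, x), 6); the cast keeps the input dtype.
    return np.clip(x, 0, 6).astype(x.dtype)


# A float16 input of any shape is accepted; 7.5 is clipped to 6.
x = np.array([[-1.0, 3.0], [7.5, 0.5]], dtype=np.float16)
y = checked_relu6(x)
# y has shape (2, 2), dtype float16, and values [[0, 3], [6, 0.5]]

# An int32 input is rejected, as described under Raises.
try:
    checked_relu6(np.array([1, 2, 3], dtype=np.int32))
except TypeError as err:
    print("TypeError:", err)
```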

- Supported Platforms:

Ascend GPU CPU

- Examples:

>>> import mindspore
>>> from mindspore import Tensor, nn
>>> import numpy as np
>>> x = Tensor(np.array([-1, -2, 0, 2, 1]), mindspore.float16)
>>> relu6 = nn.ReLU6()
>>> # Negative inputs become 0; inputs in [0, 6] pass through unchanged.
>>> output = relu6(x)
>>> print(output)
[0. 0. 0. 2. 1.]