mindspore.mint.nn.SiLU

- class mindspore.mint.nn.SiLU(inplace=False)

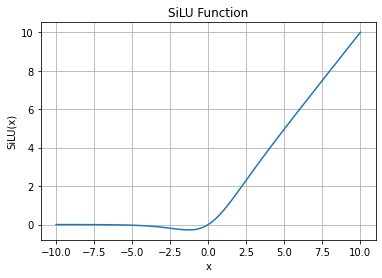

Calculate the SiLU activation function element-wise. It is also sometimes referred to as Swish function.

The SiLU function is defined as follows:

\[\text{SiLU}(x) = x * \sigma(x),\]where \(x_i\) is an element of the input, \(\sigma(x)\) is Sigmoid function.

\[\text{sigmoid}(x_i) = \frac{1}{1 + \exp(-x_i)},\]SiLU Activation Function Graph:
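As a hand check of the definition, substituting the Sigmoid formula into the SiLU formula and evaluating at the inputs used in the Examples section gives the values below (rounded to four significant figures). They agree with the float16 output printed there (-0.269, 1.762, -0.1423) to within half-precision rounding.

\[
\begin{aligned}
\text{SiLU}(x_i) &= x_i \cdot \sigma(x_i) = \frac{x_i}{1 + \exp(-x_i)},\\
\text{SiLU}(-1) &= \frac{-1}{1 + e^{1}} \approx -0.2689,\\
\text{SiLU}(2) &= \frac{2}{1 + e^{-2}} \approx 1.762,\\
\text{SiLU}(-3) &= \frac{-3}{1 + e^{3}} \approx -0.1423.
\end{aligned}
\]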

Warning

This is an experimental API that is subject to change or deletion.

- Parameters

inplace (bool, optional) – If True, the input tensor is updated in place with the result. Default: False.
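The difference between the two settings of inplace can be illustrated without MindSpore. The sketch below is a hypothetical, framework-free stand-in: a plain Python list plays the role of the tensor, and the helpers silu and _silu_scalar are illustrative names, not MindSpore APIs. It assumes, as the parameter description states, that inplace=True writes the results back into the input's storage.

```python
import math
from typing import List


def _silu_scalar(x: float) -> float:
    """x * sigmoid(x), arranged so math.exp never receives a large positive argument."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so z lies in (0, 1) and cannot overflow
    return x * z / (1.0 + z)


def silu(values: List[float], inplace: bool = False) -> List[float]:
    """Hypothetical list-based stand-in for SiLU(inplace=...).

    inplace=False: build and return a new list; `values` is left untouched.
    inplace=True:  overwrite `values` element by element and return that same list.
    """
    if inplace:
        for i, x in enumerate(values):
            values[i] = _silu_scalar(x)
        return values
    return [_silu_scalar(x) for x in values]


data = [-1.0, 2.0, -3.0]
out = silu(data)                 # out-of-place: a new list is returned
print(data)                      # [-1.0, 2.0, -3.0]  (input unchanged)
same = silu(data, inplace=True)  # in-place: data now holds the results
print(same is data)              # True  (the input object itself was returned)
```

Writing in place saves allocating a second buffer but discards the original input values, so it is only safe when nothing else still needs them.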

- Inputs:

input (Tensor) - The input tensor.

- Outputs:

Tensor, with the same shape and dtype as input.

- Supported Platforms:

Ascend

Examples

```python
>>> import mindspore
>>> input = mindspore.tensor([-1, 2, -3, 2, -1], mindspore.float16)
>>> silu = mindspore.mint.nn.SiLU(inplace=False)
>>> output = silu(input)
>>> print(output)
[-0.269 1.762 -0.1423 1.762 -0.269]
```
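To sanity-check the printed values without MindSpore or an Ascend device, the sketch below recomputes SiLU for the same inputs in double precision with Python's math module, then rounds each result to IEEE 754 half precision using the struct module's "e" format to approximate float16 storage. This is an assumption-laden reference, not MindSpore's kernel: the Ascend implementation may compute intermediates differently, so agreement is expected only to within float16 resolution, not bit for bit. The helper names silu_ref and to_float16 are hypothetical.

```python
import math
import struct
from typing import List


def silu_ref(x: float) -> float:
    """Reference SiLU(x) = x * sigmoid(x) in double precision (overflow-safe)."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)  # x < 0, so 0 < z < 1
    return x * z / (1.0 + z)


def to_float16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


inputs: List[float] = [-1.0, 2.0, -3.0, 2.0, -1.0]
outputs = [to_float16(silu_ref(x)) for x in inputs]
print([round(v, 4) for v in outputs])
```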