mindspore.mint.nn.SELU

- class mindspore.mint.nn.SELU

Apply SELU (scaled exponential linear unit) element-wise.

Refer to

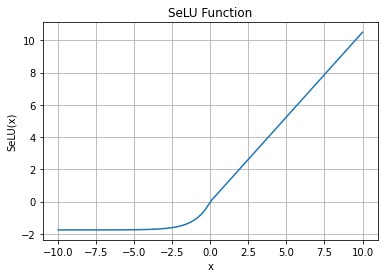

mindspore.mint.nn.functional.selu()for more details.SELU Activation Function Graph:

- Supported Platforms:

Ascend

Examples

>>> import mindspore
>>> selu = mindspore.mint.nn.SELU()
>>> input_tensor = mindspore.tensor([[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]], mindspore.float32)
>>> output = selu(input_tensor)
>>> print(output)
[[-1.1113307  4.202804  -1.7575096]
 [ 2.101402  -1.7462534  9.456309 ]]
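The printed values can be cross-checked without MindSpore. The sketch below is a pure-Python reference, assuming the operator uses the standard SELU definition, `scale * x` for `x > 0` and `scale * alpha * (exp(x) - 1)` otherwise, with the usual constants `alpha ≈ 1.6732632` and `scale ≈ 1.0507010`. The helper name `selu_reference` is hypothetical and is not part of the MindSpore API.

```python
import math

# Standard SELU constants (assumption: MindSpore uses these same values;
# the example output above is consistent with them).
ALPHA = 1.6732632423543772
SCALE = 1.0507009873554805


def selu_reference(x: float) -> float:
    """Pure-Python SELU for one value (hypothetical helper, not a MindSpore API)."""
    if x > 0:
        # Positive inputs are only scaled.
        return SCALE * x
    # Non-positive inputs follow a scaled exponential that saturates
    # near -SCALE * ALPHA (about -1.7581) for large negative x.
    return SCALE * ALPHA * (math.exp(x) - 1.0)


# Same values as the input tensor in the example above.
rows = [[-1.0, 4.0, -8.0], [2.0, -5.0, 9.0]]
reference = [[selu_reference(v) for v in row] for row in rows]

for row in reference:
    print([round(v, 4) for v in row])
```

Under these assumptions the results should agree with the float32 output shown above to within rounding error; note how the negative inputs `-5.0` and `-8.0` both land close to the saturation value.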