mindspore.mint.nn.ReLU

- class mindspore.mint.nn.ReLU

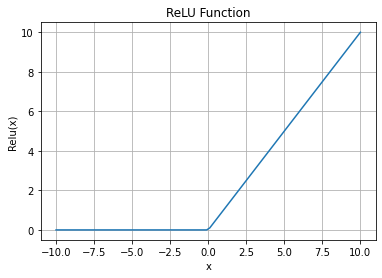

Applies the rectified linear unit (ReLU) activation function element-wise.

\[\text{ReLU}(input) = (input)^+ = \max(0, input)\]

Each element of the output is \(\max(0, input)\) evaluated on the corresponding input element.
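As a rough illustration of the formula, the following plain-Python sketch applies \(\max(0, input)\) to each value in a list. The helper name `relu_reference` is hypothetical and is not part of MindSpore; it only mirrors the arithmetic and says nothing about how the operator is implemented on device.

```python
def relu_reference(values):
    """Return max(0, v) for each v.

    Hypothetical plain-Python stand-in for illustration only,
    not the MindSpore kernel.
    """
    return [max(0.0, v) for v in values]


# Same values as the example on this page.
inputs = [-1.0, 2.0, -3.0, 2.0, -1.0]
outputs = relu_reference(inputs)
print(outputs)  # [0.0, 2.0, 0.0, 2.0, 0.0]
```

Negative entries map to zero and non-negative entries are returned unchanged, which matches the output shown in the Examples section.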

Note

Negative input values are set to zero, while non-negative values pass through unchanged.

ReLU Activation Function Graph:

- Inputs:

input (Tensor) - The input tensor of any dimension.

- Outputs:

Tensor, with the same shape and data type as input.

- Supported Platforms:

Ascend
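Because the operation is element-wise, an input of any dimension is handled one value at a time and the layout of the data is preserved. The sketch below shows this with nested Python lists standing in for a tensor; `relu_nested` is a hypothetical helper written for this illustration, not a MindSpore function.

```python
def relu_nested(x):
    """Apply max(0, v) to every scalar in an arbitrarily nested list.

    Hypothetical helper: nested lists stand in for a tensor of any rank.
    """
    if isinstance(x, list):
        # Recurse into each sub-list so the nesting structure is kept.
        return [relu_nested(item) for item in x]
    return max(0.0, x)


matrix = [[-1.5, 0.5], [2.0, -4.0]]
result = relu_nested(matrix)
print(result)  # [[0.0, 0.5], [2.0, 0.0]]
```

The result has the same nesting as the input; only the negative entries change.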

Examples

    >>> import mindspore
    >>> relu = mindspore.mint.nn.ReLU()
    >>> input_tensor = mindspore.tensor([-1.0, 2.0, -3.0, 2.0, -1.0], mindspore.float32)
    >>> output = relu(input_tensor)
    >>> print(output)
    [0. 2. 0. 2. 0.]